Much as in The Sorcerer’s Apprentice, we are eager to offload drudgery onto others, and with the advent of algorithms and their much smarter AI siblings, we have found a perfect new broom to carry the water. [1] Of course, we would also prefer to maintain control. But as Spider-Man reminds us, “with great power comes great responsibility,” or, in the case of autonomous cars, great blame.

“Chain of blame”

When AI errs and delivers bad outcomes, one might blame the AI testers, programmers, manufacturers, lawmakers, or even the users. A new study examines how individuals assess who is to blame in a series of scenarios involving autonomous cars, surgical robots, medical diagnostics, and financial planning. What the researchers found is worthy of our attention.

Do people prefer the existence of a manual mode?

Ninety-seven American participants recruited online (average age roughly 35; 52% female) were offered a choice between two cars equipped with “full autonomous ability without driver supervision.” The cars were identical except that one also had a manual override and cost 10% more. Given that autonomous cars have a better driving record, reach destinations sooner, and suffer less wear and tear than human-controlled vehicles, which car would participants prefer?

“Regardless of whether people currently own cars with autonomous features, most people want AVs to have a manual mode, even if the autonomous mode is much better at driving, and adding the manual mode is more expensive.” [emphasis added]

Does manual mode affect blame and culpability?

One hundred thirty-six Norwegian participants recruited online (average age roughly 39; two-thirds female) read the following scenario. In 2035, an individual purchased a fully autonomous car that was safer than human drivers. “The car accidentally swerved into traffic and crashed into another car, destroying both cars and severely hurting both drivers.” Half of the participants were told the car was autonomous only; the other half were told it also had a manual mode, but the owner had chosen to drive in autonomous mode.

The researchers used 7-point scales (e.g., from no anger to very angry) to assess:

- the car owner’s perceived moral responsibility

- the car owner’s deservingness of criticism

- the car owner’s deservingness of punishment

- participants’ anger toward the car owner

- the liability of both the car owner and the car manufacturer for the damages suffered by the crash victims

“Overall, the human agent was condemned more for the crash with the existence of manual mode than without manual mode.”

While the manufacturer was judged “more liable” than the human agent, the manufacturer’s liability decreased when a manual mode was present. The same results held in a subset of participants who had practical knowledge of autonomous cars because they owned cars “with some capacity for autonomous driving,” e.g., Teslas.

Are the findings applicable in other domains?

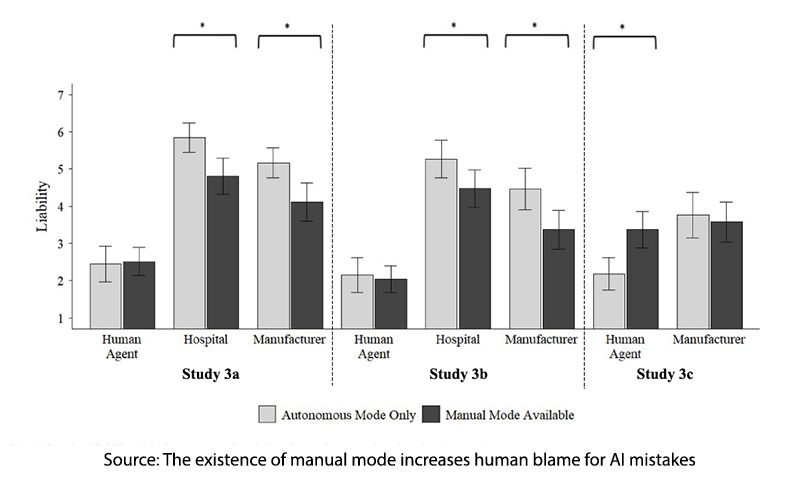

The researchers ran similar online surveys with Norwegian participants (average age roughly 35 to 40; about half female) in three other domains: robotic surgery, interpretation of X-rays, and financial decision-making.

In each study, a vignette described an autonomous system, with or without a manual override, that went on to cause a patient’s death or the loss of a client’s entire fortune. Participants then rated blame on the same Likert scales. [2]

In each case, participants assigned greater liability to the manufacturer or hospital than to the human operator, but that liability decreased when a human-controlled manual override was available.

“Taken together, the evidence … suggest[s] that people blame humans more for AI mistakes when the AI system had a manual mode. The results further suggest that a manual mode reduces how much people blame the manufacturer and the AI itself for mistakes.”

Actio libera in causa: “action free in its cause”

This legal principle holds individuals accountable for offenses committed after they willingly put themselves in a situation that led to the crime, even if they did not intend any criminal act. Impaired driving falls under this principle, as does the rule that you cannot claim self-defense for using lethal force in a fight you started.

Building on actio libera in causa, the researchers examined two mechanisms that might explain the heightened blame attributed to humans:

- Perceived causation directly links a human’s actions to the outcome; the manual mode “heightens the belief that the agent caused the accident.”

- Counterfactual cognition is an indirect linkage based on how different actions could have altered the outcome.

For a drunk driver, getting behind the wheel while impaired is an example of perceived causation, while failing to call an Uber when given the opportunity is an example of counterfactual cognition. [3] In liability cases involving airplane autopilots, humans have been held liable both when they used the autopilot (perceived causation) and when they did not (counterfactual cognition).

In another online survey, 454 American participants read a scenario in which a fully autonomous car mistakenly injured a child. As before, some cars were solely autonomous, while others had a manual override. Participants were also told either that the AI was as likely to err as a human driver or that it was less likely to err. The researchers found:

“The existence of manual mode increased the perceived causal role of the human agent and the counterfactual cognition of the role of the human agent, which both are associated with increased attributed blame to the human. …Our results are consistent with the principle of Actio Libera in Causa and with research on vicarious blame, such that people are perceived as responsible and blameworthy for a mistake that they did not commit, but that they unintentionally allowed to happen and could have prevented …” [emphasis added]

Moreover, even when the AI was the safer driver, participants still held the human liable. As the researchers write,

“Our studies suggest that even though the AI is generally or statistically safer, participants seem to perceive that the human agent could have done a better job in that specific moment to avoid an accident (i.e., counterfactual cognition), and even if the accident could not have been prevented, the human agent simply having more control over the situation (i.e., perceived causation) can lead to increased blame.”

Section 230 and AI

There is increasing evidence that the algorithms driving social media are harming our children and, at the very least, our political discourse. Some 76% of US public schools prohibit cell phones, and 13 states have implemented bans. Yet it took ten years for the harmful impact of social media on our children to become apparent. Over the same period, our political discourse has coarsened, degraded by fake or misleading news from both sides of the aisle.

Yet in the chain of blame, Section 230 stands as a shield for AI’s developers: the platforms. As a reminder, Section 230 is a provision of the Communications Act that shields online platforms from liability for content their users post, because the platforms are not treated as the publisher or speaker of that content. But the algorithms they develop curate what we see; their role is not simply passive hosting. That seems at odds with the principle of actio libera in causa. While the study found that manufacturers were held most liable, it also showed that the manual override, the choice to engage it or not, was a powerful driver of blame. It is no wonder that when platforms invoke the shield of Section 230, social media users shoulder the additional blame.

AI is a far more powerful form of algorithm, and the urgency of an industry built upon “move fast and break things” and “it is better to apologize than ask permission” has mesmerized our elected officials into a regulatory stupor.

The last word goes to the researchers.

“The beauty of AI is that it allows humanity to outsource tasks, but it is human nature to want ultimate control. We are happy to delegate tasks to autonomous machines so long as we can—in principle—regain control of those tasks. However, maintaining a manual mode often only gives us the illusion of control because we often lack the time or ability to truly execute the task. … Sometimes people want something that isn’t good for us.”

[1] Like many of you, I was raised on the Disney version of The Sorcerer’s Apprentice, but the story comes from a 1797 poem by Goethe. The sorcerer leaves his apprentice to fetch water, but the apprentice enchants a broom to do the work, using magic in which he is not fully trained. Only the sorcerer’s return ends the ensuing flood. A special thank you to Yuval Harari and his new book Nexus for making the connection for me.

[2] “The autonomous robot surgeon was operating to remove a cancerous prostate gland, it mistook a healthy artery for a tumor and tried to remove the artery, which led to the death of the patient. … An AI radiologist misperceived an early-stage cancerous tumor as healthy tissue, eventually leading to the death of the patient. … A human financial planner takes over a widow’s account and leaves it to the AI stock-picking robot. The robot decides to invest all of the widow’s money in one company that declares bankruptcy hours later, losing the widow’s entire fortune.”

[3] Counterfactual cognition, or vicarious blame, in which parents are held liable for their children because they are obligated to supervise them, is illustrated by the recent school shooting at Apalachee High School in Winder, Georgia, where the shooter’s father has been charged with manslaughter and murder.

Source: “The existence of manual mode increases human blame for AI mistakes,” Cognition, DOI: 10.1016/j.cognition.2024.105931